Latest Posts

See what's new

An Introduction to Autoencoders and Variational Autoencoders

On February 13, 2022 In Computer Vision, Deep Learning, Machine Learning

What is an Autoencoder? An autoencoder is a neural network trained to compress its input and recreate the original input from the compressed data. This procedure is useful in applications such as dimensionality reduction or file compression, where we want to store a more memory-efficient version of our data or reconstruct…
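To make the compress-and-reconstruct idea concrete, here is a minimal sketch that is not taken from the post: a linear autoencoder with tied weights that compresses 2-D points into a single number and reconstructs them, trained with plain gradient descent in standard-library Python. The toy data, the learning rate, and names such as `train_linear_autoencoder` are illustrative assumptions; practical autoencoders use nonlinear layers and a deep learning framework.

```python
import math
import random

# Hypothetical toy data: 2-D points that all lie on the line through the
# origin with direction (0.6, 0.8), so one number per point is enough.
DATA = [(0.6 * t, 0.8 * t) for t in [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]]


def encode(w, x):
    """Compress a 2-D point x into a single code z (projection onto w)."""
    return w[0] * x[0] + w[1] * x[1]


def decode(w, z):
    """Reconstruct a 2-D point from the code z, reusing w (tied weights)."""
    return (w[0] * z, w[1] * z)


def reconstruction_loss(w, data):
    """Mean squared error between each input and its reconstruction."""
    total = 0.0
    for x in data:
        x_hat = decode(w, encode(w, x))
        total += (x[0] - x_hat[0]) ** 2 + (x[1] - x_hat[1]) ** 2
    return total / len(data)


def train_linear_autoencoder(data, lr=0.01, steps=2000, seed=0):
    """Fit the tied weight vector w by full-batch gradient descent."""
    rng = random.Random(seed)
    w = [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for x in data:
            z = encode(w, x)
            x_hat = decode(w, z)
            r = (x[0] - x_hat[0], x[1] - x_hat[1])  # reconstruction residual
            r_dot_w = r[0] * w[0] + r[1] * w[1]
            for j in range(2):
                # d/dw_j of ||x - w (w.x)||^2 = -2 (r_j z + (r.w) x_j)
                grad[j] += -2.0 * (r[j] * z + r_dot_w * x[j])
        for j in range(2):
            w[j] -= lr * grad[j] / len(data)
    return w


w = train_linear_autoencoder(DATA)
print("learned weights:", w, "norm:", math.hypot(w[0], w[1]))
print("reconstruction loss:", reconstruction_loss(w, DATA))
```

Because the toy points lie on a line, one number per point suffices and the reconstruction loss approaches zero. With purely linear layers like these, the learned code direction coincides with the first principal component of this (already centered) data.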

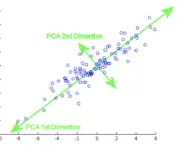

Principal Components Analysis Explained for Dummies

On January 17, 2022 In Classical Machine Learning, Machine Learning

In this post, we will have an in-depth look at principal components analysis (PCA). We start with a simple explanation to build an intuitive understanding of PCA. In the second part, we will look at a more mathematical definition of principal components analysis. Lastly, we will learn how to perform PCA in Python. What…
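As a rough illustration of what performing PCA involves, the sketch below handles only the 2-D case in standard-library Python: it centers the data, builds the sample covariance matrix, takes its largest eigenvalue and eigenvector in closed form, and projects the points onto that first principal component. The function name `pca_2d` and the sample points are hypothetical; the post itself may well use NumPy or scikit-learn instead.

```python
import math


def pca_2d(points):
    """Return (first principal component, eigenvalues, projected scores)."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Sample covariance matrix [[a, b], [b, c]] (divide by n - 1).
    a = sum(x * x for x, _ in centered) / (n - 1)
    c = sum(y * y for _, y in centered) / (n - 1)
    b = sum(x * y for x, y in centered) / (n - 1)

    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (a + c) / 2
    disc = math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    lam1, lam2 = half_trace + disc, half_trace - disc

    # Eigenvector for the largest eigenvalue lam1.
    if b != 0:
        vx, vy = lam1 - c, b
    elif a >= c:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    pc1 = (vx / norm, vy / norm)

    # Project each centered point onto the first principal component.
    scores = [x * pc1[0] + y * pc1[1] for x, y in centered]
    return pc1, (lam1, lam2), scores


points = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
pc1, (lam1, lam2), scores = pca_2d(points)
print("first principal component:", pc1)
print("explained variance ratio:", lam1 / (lam1 + lam2))
```

Since these sample points lie close to the line y = 2x, almost all of the variance is captured by a single direction, which is exactly the kind of redundancy PCA exploits for dimensionality reduction.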

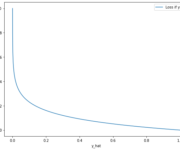

An Introduction to Neural Network Loss Functions

On September 28, 2021 In Deep Learning, Machine Learning

This post introduces the most common loss functions used in deep learning. The loss function in a neural network quantifies the difference between the expected outcome and the outcome produced by the machine learning model. From the loss function, we can derive the gradients, which are used to update the weights. The average over…
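A small sketch of how a loss leads to a weight update, assuming mean squared error as the loss and a hypothetical one-weight model `y_pred = w * x`: the gradient of the loss with respect to each prediction is chained to the weight, and one gradient descent step lowers the loss. The data and learning rate are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of (prediction - target)^2."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mse_grad(y_true, y_pred):
    """Gradient of the MSE with respect to each prediction: 2 (p - t) / n."""
    n = len(y_true)
    return [2.0 * (p - t) / n for t, p in zip(y_true, y_pred)]


# Hypothetical data and a one-weight model y_pred = w * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
w = 0.5
learning_rate = 0.05

preds = [w * x for x in xs]
loss_before = mse(ys, preds)

# Chain rule: dL/dw = sum_i (dL/dpred_i) * (dpred_i/dw), and dpred_i/dw = x_i.
dloss_dw = sum(g * x for g, x in zip(mse_grad(ys, preds), xs))

# One gradient descent step moves w against the gradient.
w -= learning_rate * dloss_dw
loss_after = mse(ys, [w * x for x in xs])

print(f"loss before: {loss_before:.4f}, gradient: {dloss_dw:.4f}, loss after: {loss_after:.4f}")
```

Here the loss falls from 10.5 to roughly 2.99 after a single step, because the update moves `w` from 0.5 toward the value 2 that fits the data exactly.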

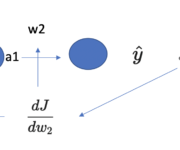

Understanding Backpropagation With Gradient Descent

On September 13, 2021 In Deep Learning, Machine Learning

In this post, we develop a thorough understanding of the backpropagation algorithm and how it helps a neural network learn new information. After a conceptual overview of what backpropagation aims to achieve, we go through a brief recap of the relevant concepts from calculus. Next, we perform a step-by-step walkthrough of backpropagation using an…
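The chain-rule bookkeeping behind backpropagation can be sketched on the smallest possible network, a single sigmoid neuron with a squared-error loss. This is an illustrative assumption rather than the post's own example: `backward` applies the chain rule step by step, `numerical_grad` checks the result with central finite differences, and a few gradient descent steps then reduce the loss. All names and values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x, y):
    """Forward pass of one sigmoid neuron with a squared-error loss."""
    z = w * x + b          # pre-activation
    a = sigmoid(z)         # activation (the prediction)
    loss = (a - y) ** 2    # squared error against the target y
    return a, loss


def backward(w, b, x, y):
    """Backward pass: apply the chain rule from the loss back to w and b."""
    a, _ = forward(w, b, x, y)
    dloss_da = 2.0 * (a - y)        # dL/da
    da_dz = a * (1.0 - a)           # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz     # dL/dz = dL/da * da/dz
    return dloss_dz * x, dloss_dz   # dL/dw = dL/dz * x, dL/db = dL/dz * 1


def numerical_grad(w, b, x, y, eps=1e-6):
    """Central finite differences, used only to check backward()."""
    dw = (forward(w + eps, b, x, y)[1] - forward(w - eps, b, x, y)[1]) / (2 * eps)
    db = (forward(w, b + eps, x, y)[1] - forward(w, b - eps, x, y)[1]) / (2 * eps)
    return dw, db


# Hypothetical starting parameters and a single training example.
w, b, x, y = 0.8, -0.2, 1.5, 1.0
analytic = backward(w, b, x, y)
numeric = numerical_grad(w, b, x, y)
print("backprop gradient:", analytic, "finite differences:", numeric)

# A few gradient descent steps using the backpropagated gradients.
initial_loss = forward(w, b, x, y)[1]
for _ in range(50):
    dw, db = backward(w, b, x, y)
    w -= 0.5 * dw
    b -= 0.5 * db
final_loss = forward(w, b, x, y)[1]
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

In a deeper network the same pattern repeats layer by layer: each layer multiplies the incoming gradient by its local derivative and passes the result backward.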

What is the Liskov Substitution Principle: An Explanation with Examples in Java

On September 18, 2022 In Design Patterns, Software Design

In this post, we will explain the Liskov substitution principle and illustrate how it works with an extended example in Java. The Liskov substitution principle states that an object of a superclass should be replaceable with an object of any of its subclasses without breaking the application. It is one of the SOLID design principles in object-oriented software…
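The post's examples are in Java; the following is only a minimal Python sketch of the classic Rectangle/Square illustration, with hypothetical class and function names. Client code written against `Rectangle` expects that setting the width and height independently yields their product as the area, and `Square` silently breaks that expectation, so it cannot be substituted for its superclass.

```python
class Rectangle:
    """Contract: width and height can be set independently."""

    def __init__(self, width, height):
        self.width = width
        self.height = height

    def set_width(self, width):
        self.width = width

    def set_height(self, height):
        self.height = height

    def area(self):
        return self.width * self.height


class Square(Rectangle):
    """Keeps both sides equal, which breaks the Rectangle contract."""

    def __init__(self, side):
        super().__init__(side, side)

    def set_width(self, width):
        self.width = self.height = width

    def set_height(self, height):
        self.width = self.height = height


def stretch(rect):
    """Client code that relies only on Rectangle's documented behaviour."""
    rect.set_width(4)
    rect.set_height(5)
    return rect.area() == 20


print(stretch(Rectangle(1, 1)))  # True
print(stretch(Square(1)))        # False: area is 25, so Square is not substitutable
```

A common remedy is to stop deriving `Square` from a mutable `Rectangle`, for example by having both implement a shared shape abstraction instead.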

What is the Open/Closed Principle: An Explanation with Examples in Java

On August 17, 2022 In Design Patterns, Software Design

The open/closed principle states that classes and modules in a software system should be open for extension but closed for modification. It is one of the SOLID principles for software design. In this post, we will build a step-by-step understanding of the open/closed principle using examples in Java. Why Should You Apply the Open/Closed Principle?
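As a hedged illustration in Python rather than the post's Java, the sketch below assumes a hypothetical checkout function that depends only on an abstract `Discount` type. New pricing rules are added as new subclasses (open for extension), while `checkout_total` never has to be edited (closed for modification).

```python
from abc import ABC, abstractmethod


class Discount(ABC):
    """Abstraction that the checkout code depends on (hypothetical example)."""

    @abstractmethod
    def apply(self, amount: float) -> float:
        ...


class NoDiscount(Discount):
    def apply(self, amount: float) -> float:
        return amount


class PercentageDiscount(Discount):
    def __init__(self, percent: float):
        self.percent = percent

    def apply(self, amount: float) -> float:
        return amount * (1 - self.percent / 100)


def checkout_total(prices, discount: Discount) -> float:
    """Closed for modification: new discount types need no edits here."""
    return discount.apply(sum(prices))


# Extension: a new policy is a new class; checkout_total stays untouched.
class FixedAmountDiscount(Discount):
    def __init__(self, amount_off: float):
        self.amount_off = amount_off

    def apply(self, amount: float) -> float:
        return max(0.0, amount - self.amount_off)


cart = [10, 20]
for policy in (NoDiscount(), PercentageDiscount(10), FixedAmountDiscount(5)):
    print(type(policy).__name__, checkout_total(cart, policy))
```

The alternative design, a single function with an `if`/`elif` branch per discount type, would have to be reopened and retested every time a new rule is introduced.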

The Single Responsibility Principle

On June 19, 2022 In Design Patterns, Software Design

What is the Single Responsibility Principle? The SRP is often misinterpreted as stipulating that a module should only do one thing. While designing functions to have only one purpose is good software engineering practice, it is not what the single responsibility principle states. In a nutshell, the single responsibility principle is one of the…

What is the Best Math Course for Machine Learning

On June 7, 2022 In Deep Learning, Machine Learning, Mathematics for Machine Learning

People trying to get started in machine learning often perceive the required mathematics as one of their biggest obstacles. Mathematical concepts from linear algebra, statistics, and calculus are foundational to many machine learning algorithms. Luckily, the past several years have seen a proliferation of online courses and other learning resources.

What is the Sliding Window Algorithm?

On May 29, 2022 In Algorithms, Computer Vision

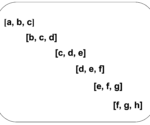

The sliding window algorithm is a method for performing operations on sequences such as arrays and strings. By using this method, time complexity can be reduced from O(n³) to O(n²) or from O(n²) to O(n). As the subarray moves from one end of the array to the other, it looks like a sliding window.
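A minimal sketch of the fixed-size variant, assuming the task is to find the largest sum of any `k` consecutive elements (a standard textbook example, not necessarily the one used in the post). The brute-force version re-sums every window, costing O(n·k), which is O(n²) in the worst case when `k` grows in proportion to `n`. The sliding version updates the running sum in O(1) per step by adding the element that enters the window and subtracting the one that leaves, for O(n) overall. Function names are hypothetical.

```python
def max_window_sum_brute_force(nums, k):
    """Re-sum every window of length k: O(n * k) work."""
    return max(sum(nums[i:i + k]) for i in range(len(nums) - k + 1))


def max_window_sum_sliding(nums, k):
    """Slide a window of length k across nums, updating its sum in O(1)."""
    if not 1 <= k <= len(nums):
        raise ValueError("k must be between 1 and len(nums)")
    window_sum = sum(nums[:k])  # sum of the first window
    best = window_sum
    for i in range(k, len(nums)):
        # nums[i] enters the window and nums[i - k] leaves it.
        window_sum += nums[i] - nums[i - k]
        best = max(best, window_sum)
    return best


nums = [2, 1, 5, 1, 3, 2]
print(max_window_sum_sliding(nums, 3))  # windows sum to 8, 7, 9, 6, so this prints 9
```

Variable-size windows (for example, the longest substring without repeated characters) follow the same idea, but grow and shrink the window with two indices instead of keeping its length fixed.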

Deep Learning Architectures for Object Detection: YOLO vs. SSD vs. RCNN

On May 22, 2022

In this post, we will look at the major deep learning architectures that are used in object detection. We first develop an understanding of the region proposal algorithms that were central to the initial object detection architectures. Then we dive into the architectures of various forms of RCNN, YOLO, and SSD and understand what…

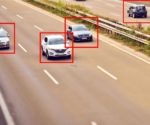

Foundations of Deep Learning for Object Detection: From Sliding Windows to Anchor Boxes

On May 12, 2022 In Computer Vision, Deep Learning, Machine Learning

In this post, we will cover the foundations of how neural networks learn to localize and detect objects. Object Localization vs. Object Detection: Object localization refers to the practice of detecting a single prominent object in an image or a scene, while object detection is about detecting several objects. A neural network can localize…

How to Learn Machine Learning: A Guide for Self-Starters

On April 26, 2022 In Machine Learning

Machine learning has emerged as one of the hottest technology trends. Salaries for skilled machine learning engineers are through the roof, and many companies are unable to fill open positions in the field. Moving into machine learning, therefore, can be a very promising career move. But how difficult is it to get a job…

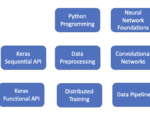

How to Learn TensorFlow Fast: A Learning Roadmap with Resources

On April 21, 2022 In Deep Learning, Machine Learning

TensorFlow is one of the two dominant deep learning frameworks. It is heavily used in industry to build cutting-edge AI applications. While its rival PyTorch has grown in popularity in recent years, TensorFlow is still the dominant framework in industry applications. Most machine learning engineers, especially deep learning engineers, are well-advised to…

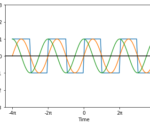

The Fourier Transform and Its Math Explained From Scratch

On April 12, 2022 In Mathematics for Machine Learning

In this post, we will build the mathematical knowledge for understanding the Fourier transform from the very foundations. In the first section, we will briefly discuss sinusoidal functions and complex numbers as they relate to Fourier transforms. Next, we will develop an understanding of Fourier series and how we can approximate periodic functions using…
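The excerpt breaks off before naming an example, so the following is only an assumed illustration: partial sums of the well-known Fourier series of a square wave, f(x) ≈ (4/π) Σ sin(nx)/n over odd n, written in standard-library Python. Adding more sinusoidal terms lowers the mean squared approximation error, although the overshoot near the jumps (the Gibbs phenomenon) does not disappear. The function names are hypothetical.

```python
import math


def square_wave(x):
    """Target periodic function: +1 where sin(x) > 0, -1 where sin(x) < 0."""
    s = math.sin(x)
    if s > 0:
        return 1.0
    if s < 0:
        return -1.0
    return 0.0


def square_wave_partial_sum(x, n_terms):
    """Sum of the first n_terms odd harmonics: (4/pi) * sum sin(n x) / n."""
    total = 0.0
    for k in range(n_terms):
        n = 2 * k + 1  # only odd harmonics appear in the square wave's series
        total += math.sin(n * x) / n
    return 4.0 / math.pi * total


def mean_squared_error(n_terms, samples=1000):
    """Average squared error over one period, sampled at interval midpoints."""
    err = 0.0
    for i in range(samples):
        x = 2.0 * math.pi * (i + 0.5) / samples
        err += (square_wave(x) - square_wave_partial_sum(x, n_terms)) ** 2
    return err / samples


for terms in (1, 5, 50):
    print(terms, "terms -> MSE", round(mean_squared_error(terms), 4))
```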