Classical Machine Learning Archive

Principal Components Analysis Explained for Dummies

On January 17, 2022 In Classical Machine Learning, Machine Learning

In this post, we will take an in-depth look at principal components analysis (PCA). We start with a simple explanation to build an intuitive understanding of PCA. In the second part, we will look at a more mathematical definition of principal components analysis. Lastly, we learn how to perform PCA in Python. What

Understanding Hinge Loss and the SVM Cost Function

On August 22, 2021 In Classical Machine Learning, Machine Learning

In this post, we develop an understanding of the hinge loss and how it is used in the cost function of support vector machines. Hinge Loss The hinge loss is a specific type of cost function that incorporates a margin or distance from the classification boundary into the cost calculation. Even if new observations
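The margin idea in this excerpt can be illustrated with a minimal sketch. It is not taken from the linked post: it assumes labels encoded as -1 and +1, raw decision scores from some classifier, and a margin of 1, and the function name `hinge_loss` is hypothetical.

```python
import numpy as np

def hinge_loss(y_true, scores):
    """Mean hinge loss, assuming labels in {-1, +1} and raw decision scores."""
    y_true = np.asarray(y_true, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # A point contributes zero loss only if it is on the correct side
    # of the boundary by at least the margin (here assumed to be 1).
    per_sample = np.maximum(0.0, 1.0 - y_true * scores)
    return per_sample.mean()

# Illustrative values only: one confident correct prediction, one correct
# prediction inside the margin, and one misclassified point.
labels = [1, -1, 1]
scores = [2.0, -0.5, -1.0]
loss = hinge_loss(labels, scores)
```

In this sketch the second point is classified correctly but still incurs a loss because it lies inside the margin, which is the behavior that distinguishes the hinge loss from a plain misclassification count.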

What is a Support Vector?

On August 17, 2021 In Classical Machine Learning, Machine Learning

In this post, we will develop an understanding of support vectors, discuss why we need them, how to construct them, and how they fit into the optimization objective of support vector machines. A support vector machine classifies observations by constructing a hyperplane that separates these observations. Support vectors are observations that lie on the

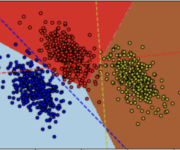

What is a Kernel in Machine Learning?

On August 11, 2021 In Classical Machine Learning, Machine Learning

In this post, we are going to develop an understanding of kernels in machine learning. We frame the problem that kernels attempt to solve, followed by a detailed explanation of how kernels work. To deepen our understanding of kernels, we apply a Gaussian kernel to a non-linear problem. Finally, we briefly discuss the construction

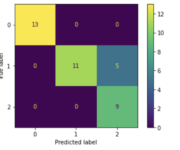

Logistic Regression in Python

On May 7, 2021 In Classical Machine Learning, Machine Learning

In this post, we are going to perform binary logistic regression and multinomial logistic regression in Python using SKLearn. If you want to know how the logistic regression algorithm works, check out this post. Binary Logistic Regression in Python For this example, we are going to use the breast cancer classification dataset that comes

The Softmax Function and Multinomial Logistic Regression

On May 4, 2021 In Classical Machine Learning, Machine Learning

In this post, we will introduce the softmax function and discuss how it can help us in a logistic regression analysis setting with more than two classes. This is known as multinomial logistic regression and should not be confused with multiple logistic regression which describes a scenario with multiple predictors. What is the Softmax
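As a rough illustration of how softmax handles more than two classes, the sketch below turns a vector of class scores into probabilities that sum to one. It is not the linked post's code: the function name `softmax` and the example scores are assumptions, and subtracting the maximum score is a common numerical-stability step rather than part of the definition.

```python
import numpy as np

def softmax(z):
    """Map raw class scores to probabilities along the last axis."""
    z = np.asarray(z, dtype=float)
    # Shifting by the maximum leaves the result unchanged but avoids overflow.
    shifted = z - np.max(z, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# Illustrative scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = int(np.argmax(probs))
```

The class with the largest score receives the largest probability, so taking the argmax of the output gives the predicted class.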

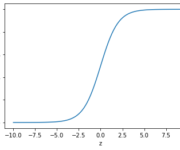

The Sigmoid Function and Binary Logistic Regression

On May 3, 2021 In Classical Machine Learning, Machine Learning

In this post, we introduce the sigmoid function and understand how it helps us to perform binary logistic regression. We will further discuss the gradient descent for the logistic regression model (logit model). In linear regression, we are constructing a regression line of the form y = kx + d. Within the specified range,

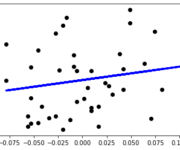

Linear Regression in Python

On April 28, 2021 In Classical Machine Learning, Machine Learning

This post is about doing simple linear regression and multiple linear regression in Python. If you want to understand how linear regression works, check out this post. To perform linear regression, we need Python’s package numpy as well as the package sklearn for scientific computing. Furthermore, we import matplotlib for plotting. Simple Linear Regression

The Coefficient of Determination and Linear Regression Assumptions

On April 26, 2021 In Classical Machine Learning, Machine Learning

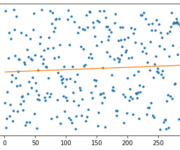

We’ve discussed how the linear regression model works. But how do you evaluate how good your model is? This is where the coefficient of determination comes in. In this post, we are going to discuss how to find the coefficient of determination and how to interpret it. What is the Coefficient of Determination? The

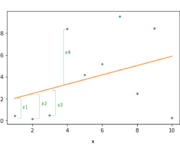

Ordinary Least Squares Regression

On April 25, 2021 In Classical Machine Learning, Machine Learning

In this post, we are going to develop a mathematical understanding of linear regression using the most commonly applied method of ordinary least squares. We will use linear algebra and calculus to demonstrate why the least-squares method works. For a simple, intuitive introduction to regression that doesn’t require advanced math, check out the previous
