Conjugate Priors

In this post, we introduce conjugate priors and show how they let us obtain the parameters of the posterior directly from the prior and the observed data.

The real world is messy and the probability of events is usually influenced by environmental factors. In statistics, we have methodologies for dealing with uncertainty and environmental influences, such as prior probabilities in Bayesian inference and maximum likelihood estimation.

In practical applications, we can account for environmental influences by assuming a prior probability distribution. Once we’ve seen the data for our problem at hand, we can update the prior with our new knowledge derived from the data. This gives us the posterior.

While this sounds straightforward, there are several roadblocks.

How do we know what prior distribution is appropriate for our problem? And how can we calculate the posterior from the chosen prior?

The prior expresses our knowledge of the problem before we have seen any data. This statement is quite vague. For practical applications, we commonly pick a prior that is computationally convenient. A prior conjugate to the likelihood function is computationally convenient because it is of the same form as the posterior.

To understand why this is helpful, let’s deal with the second question. To get to the posterior from our prior, we can use Bayes’ theorem. In a nutshell, Bayes’ theorem says that the posterior is equal to the likelihood times the prior divided by a normalization term.

posterior = \frac{likelihood \times prior}{normalization \; constant}

The normalization term does not depend on the parameter we are trying to infer; for a given dataset it is a constant. Accordingly, the posterior is proportional to the likelihood times the prior.

posterior \propto likelihood \times prior

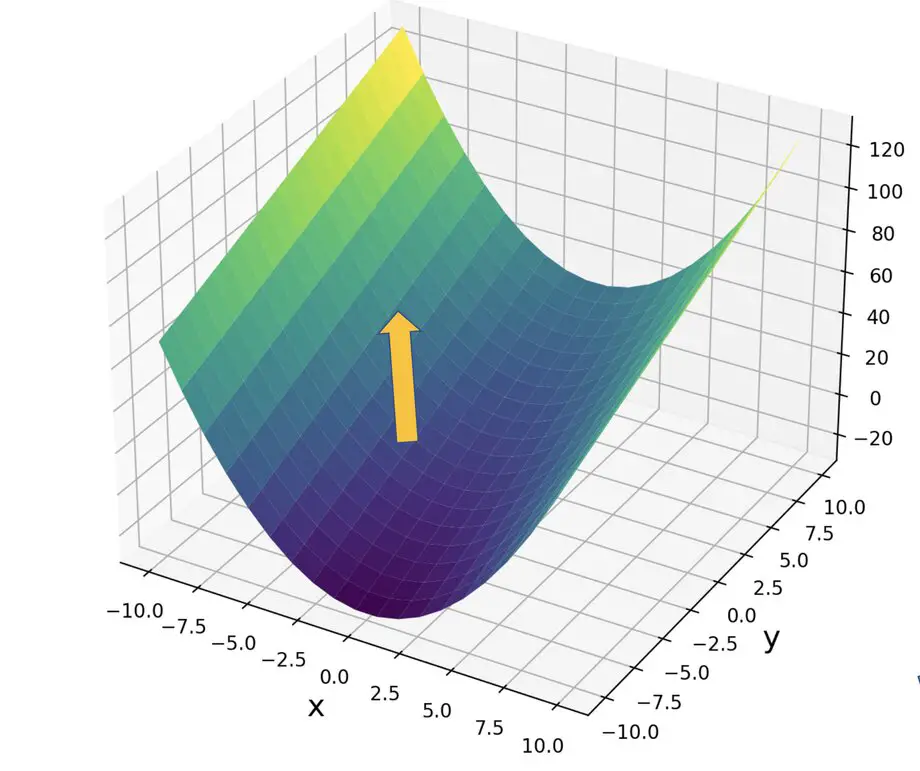

In practice, this calculation can be very expensive. The normalization constant is an integral over all possible parameter values, and it often has no closed form. We would then have to fall back on numerical methods, for example evaluating the likelihood times the prior over and over at different parameter values to find the ones that maximize the posterior.
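To make the cost of the brute-force route concrete, here is a minimal sketch of a grid approximation, one of several possible numerical approaches. The setup is an assumption made for illustration: Bernoulli observations, a flat prior on [0, 1], made-up data, and the hypothetical helper names `bernoulli_likelihood` and `grid_posterior`.

```python
# Grid approximation of a posterior: posterior ∝ likelihood × prior.
# Assumed setup (not from the post): 0/1 observations and a flat prior on theta.

def bernoulli_likelihood(theta, data):
    """Likelihood of a list of 0/1 observations for a given theta."""
    result = 1.0
    for x in data:
        result *= theta if x == 1 else (1.0 - theta)
    return result

def grid_posterior(data, prior, n_points=1001):
    """Evaluate the normalized posterior density on an even grid over [0, 1]."""
    step = 1.0 / (n_points - 1)
    grid = [i * step for i in range(n_points)]
    # One likelihood evaluation per grid point: this is the repeated work.
    unnormalized = [bernoulli_likelihood(t, data) * prior(t) for t in grid]
    # Approximate the normalization constant with the trapezoidal rule.
    area = step * (sum(unnormalized) - 0.5 * (unnormalized[0] + unnormalized[-1]))
    return grid, [u / area for u in unnormalized]

def flat_prior(theta):
    """Assumed uniform prior density on [0, 1]."""
    return 1.0

data = [1, 0, 1, 1]  # made-up observations
grid, density = grid_posterior(data, flat_prior)
mode = grid[density.index(max(density))]
print(f"posterior mode is approximately {mode:.3f}")
```

For this data the posterior is proportional to θ³(1-θ), which peaks at θ = 0.75, so the grid result can be checked by hand. The sketch needs a thousand likelihood evaluations for a single parameter, and the grid grows exponentially with the number of parameters.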

If, however, the prior is conjugate to the likelihood function, we can obtain the posterior parameters directly from the prior parameters and the observed data, without performing this expensive calculation.

Let’s illuminate this with an example.

Beta-Bernoulli Conjugacy

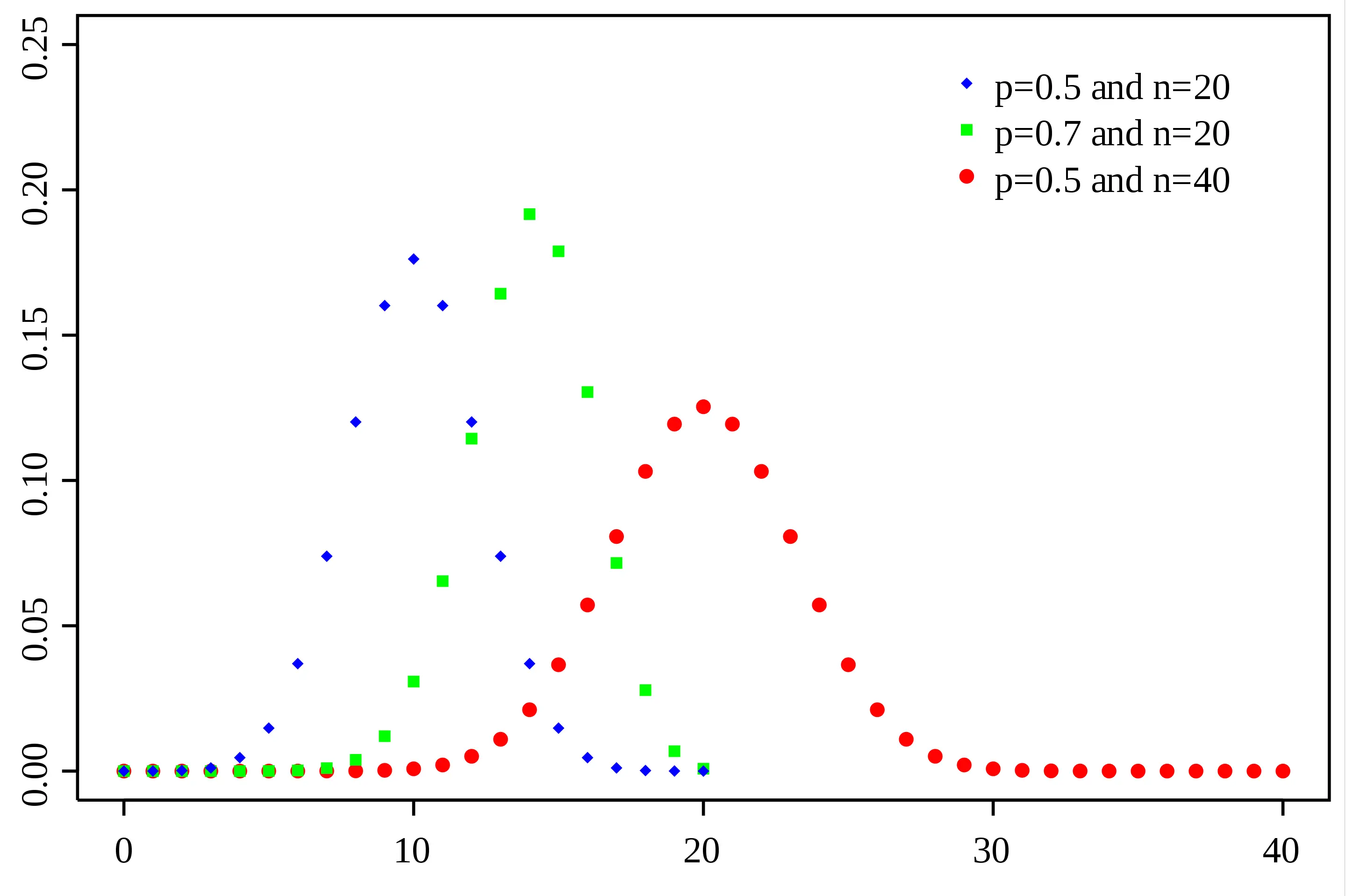

The Beta distribution models the distribution of Bernoulli trial probabilities. For problems that have a Bernoulli or Binomial likelihood function, the Beta distribution is a conjugate prior.

Accordingly, the posterior has to be of the same form as the prior. It also has to follow a Beta distribution.

Let’s say we have a Bernoulli likelihood function that gives the probability of an observation x, which is either 0 or 1, given the parameter θ.

p(x|\theta) = \theta^x(1-\theta)^{1-x}

θ follows a Beta prior distribution of the following form:

p(\theta | a,b) \propto \theta^{a-1} (1-\theta)^{b-1}

To obtain the posterior, we use our Bayesian formula. Multiplying the Bernoulli likelihood function of θ with the Beta distributed conjugate prior should give us a Beta distributed posterior.

p(\theta | x,a,b) \propto p(x|\theta) p(\theta | a,b)

\propto \theta^x(1-\theta)^{1-x} \theta^{a-1} (1-\theta)^{b-1}

= \theta^{a-1+x} (1-\theta)^{b-1+(1-x)}

= \theta^{(a+x)-1} (1-\theta)^{(b+(1-x))-1}

As you can see, this last expression has the form of the Beta distribution with the following parameters.

p(\theta|a+x,b+(1-x))
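Applied once per observation, this update just adds each x to a and each (1-x) to b. The following is a minimal sketch of that rule, not a reference implementation; the function names, the prior Beta(2, 2), and the data are all assumptions chosen for illustration.

```python
import math

def beta_bernoulli_update(a, b, observations):
    """Return the posterior Beta parameters after a sequence of 0/1 observations."""
    for x in observations:
        if x not in (0, 1):
            raise ValueError("Bernoulli observations must be 0 or 1")
        # The conjugate update derived above: a -> a + x, b -> b + (1 - x).
        a, b = a + x, b + (1 - x)
    return a, b

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

def beta_kernel(theta, a, b):
    """Unnormalized Beta density: theta^(a-1) * (1-theta)^(b-1)."""
    return theta ** (a - 1) * (1 - theta) ** (b - 1)

def bernoulli_likelihood(theta, observations):
    """Product of theta^x * (1-theta)^(1-x) over all observations."""
    return math.prod(theta ** x * (1 - theta) ** (1 - x) for x in observations)

a0, b0 = 2.0, 2.0    # assumed prior parameters
data = [1, 0, 1, 1]  # made-up observations

a_post, b_post = beta_bernoulli_update(a0, b0, data)
print(a_post, b_post)             # 5.0 3.0
print(beta_mean(a_post, b_post))  # 0.625

# Sanity check of the derivation: likelihood × prior kernel divided by the
# posterior kernel should be the same constant for every theta.
ratios = [
    bernoulli_likelihood(t, data) * beta_kernel(t, a0, b0) / beta_kernel(t, a_post, b_post)
    for t in (0.2, 0.5, 0.9)
]
print(all(math.isclose(r, ratios[0]) for r in ratios))
```

No optimization or integration is involved: the posterior parameters come from simple addition, and the order of the observations does not matter.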

The Beta distribution is a conjugate prior for several likelihood functions, such as the Bernoulli and the Binomial. Similarly, for a normal likelihood with known variance, the conjugate prior for the mean is itself a normal distribution.
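The normal case can be sketched the same way. The example below assumes the simplest setting, which this post does not derive: the observation variance is known, the unknown parameter is the mean, and the prior on the mean is normal. Under those assumptions the posterior precision (the inverse variance) is the sum of the prior precision and the data precision. The function name and the numbers are hypothetical.

```python
def normal_known_variance_update(prior_mean, prior_var, data, noise_var):
    """Posterior mean and variance of a normal mean, given a normal prior
    and observations with known variance noise_var."""
    prior_precision = 1.0 / prior_var
    data_precision = len(data) / noise_var
    # Precisions add; the posterior variance is the inverse of their sum.
    post_var = 1.0 / (prior_precision + data_precision)
    # The posterior mean is a precision-weighted combination of the prior
    # mean and the observations.
    post_mean = post_var * (prior_mean * prior_precision + sum(data) / noise_var)
    return post_mean, post_var

# Made-up numbers: prior N(0, 1), three observations with unit noise variance.
post_mean, post_var = normal_known_variance_update(0.0, 1.0, [1.0, 2.0, 3.0], 1.0)
print(post_mean, post_var)  # 1.5 0.25
```

As with the Beta-Bernoulli pair, the posterior stays in the same family as the prior, so the update reduces to arithmetic on two parameters.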

This post is part of a series on statistics for machine learning and data science. To read other posts in this series, go to the index.