How to Find Vector Projections

In this post, we learn how to perform vector projections and scalar projections. In the process, we also look at the basis of a vector space and how to perform a change of basis.

What is a Vector Projection?

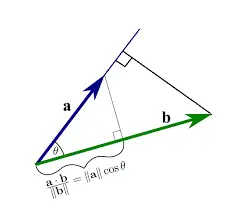

A vector projection of a vector a onto another vector b is the orthogonal projection of a onto b. To intuitively understand the concept of a vector projection, you can imagine the projection of a onto b as the shadow of a falling on b if the sun were to shine on b at a right angle.

If we want to project vector a onto b, we can imagine vector a and its projection on b as sides in a right-angled triangle.

Source: https://math.stackexchange.com/questions/3522452/projection-of-vector-onto-a-shorter-vector-allowed

How to find the Scalar Projection?

In the previous section on dot products, we’ve established that

a \cdot b = |a||b|\cos(\theta)

\frac{a \cdot b}{|b|} = |a|\cos(\theta)

The expressions |a| and |b| represent the lengths of the vectors a and b.

From trigonometry, we know that

\cos(\theta) = \frac{adjacent}{hypotenuse}

\cos(\theta) \times hypotenuse = adjacent

In our triangle |a| (the length of a) is the hypotenuse, and the adjacent side is the projection of a onto b, therefore

projection(ab) = |a|\cos(\theta) = \frac{a \cdot b}{|b|}

This is also known as the scalar projection.

What’s the use of the scalar projection?

The scalar projection tells you how the direction of a vector a relates to the direction of another vector b. If the scalar projection is positive, the angle between the vectors is less than 90 degrees, meaning they are heading in a similar direction. If it is negative, the angle is greater than 90 degrees, so a has a component pointing against b. If it is zero, the vectors are orthogonal.

Its absolute value gives you the length of the projection of a onto b. If the sun were to shine on b at a right angle, it would be the length of the shadow of a on b.
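To make the sign and length interpretation concrete, here is a minimal sketch in plain Python. The helper names `dot`, `norm`, and `scalar_projection` are chosen for illustration and are not from any particular library; vectors are assumed to be plain lists of numbers.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

def norm(a):
    """Length |a| of a vector."""
    return math.sqrt(dot(a, a))

def scalar_projection(a, b):
    """Signed length of the projection of a onto b: (a . b) / |b|."""
    return dot(a, b) / norm(b)

b = [4.0, 0.0]
print(scalar_projection([3.0, 4.0], b))   # 3.0: a points broadly along b
print(scalar_projection([-3.0, 4.0], b))  # -3.0: a points against b
print(scalar_projection([0.0, 4.0], b))   # 0.0: a is orthogonal to b
```

Note that the result does not depend on the length of b, only on its direction.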

How to find the Vector Projection?

Now, how do we find the actual vector that defines the projection of a onto b? Remember, the scalar projection has only given us the length of the projection. It hasn’t given us the actual vector.

It is actually quite easy.

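The projection of a onto b lies along b (it points in the same direction as b when the scalar projection is positive, and in the opposite direction when it is negative). So all we need to do is scale b so that its length matches the scalar projection. The easiest way to do this is to first calculate the unit vector in the direction of b, which is b divided by its length |b|, and then multiply it by the scalar projection of a onto b.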
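The recipe just described (unit vector of b, scaled by the scalar projection) can be sketched in a few lines of plain Python. The function name `vector_projection` is a hypothetical helper for this illustration, and b is assumed to be nonzero.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

def vector_projection(a, b):
    """Projection of a onto b: unit vector of b times the scalar projection."""
    length_b = math.sqrt(dot(b, b))
    unit_b = [x / length_b for x in b]   # b / |b|
    scalar = dot(a, b) / length_b        # (a . b) / |b|
    return [scalar * x for x in unit_b]

a = [3.0, 4.0]
b = [2.0, 0.0]
p = vector_projection(a, b)
print(p)  # [3.0, 0.0]

# The leftover part of a is perpendicular to b, as in the right-angled triangle.
residual = [ai - pi for ai, pi in zip(a, p)]
print(dot(residual, b))  # 0.0
```

The check at the end reflects the shadow picture: what remains of a after removing its projection is at a right angle to b.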

proj_b a = \frac{b}{|b|} \frac{a \cdot b}{|b|} = \frac{a \cdot b}{|b|^2} b

Performing a Change of Basis

Formally we define a basis of a vector space as a set of linearly independent vectors that span that vector space. What does this mean?

As you may remember, if you’ve read my post on linear independence, nonzero vectors that are orthogonal to each other are linearly independent.

Up until now, we’ve worked almost exclusively in the standard 2D coordinate system consisting of an x and a y-axis. This standard coordinate system can be considered a vector space, where the x-axis is described by the basis vector \begin{bmatrix}1\\0\end{bmatrix} and the y-axis by the basis vector \begin{bmatrix}0\\1\end{bmatrix}. As you can see, the x and y axes are orthogonal to each other, and thus linearly independent. We can say they form an orthogonal basis. More generally, any set of linearly independent vectors forms a basis for the space it spans, and so defines its own coordinate system.

If V is a vector space with basis {v1, v2,…, vn}, then every vector v ∈ V can be written as a unique linear combination of v1, v2,…, vn.

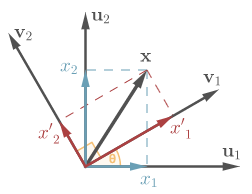

If you have a vector x represented by its coordinates with respect to your existing basis U = {u1, u2,…, un}, then you can represent that same vector x with respect to a different basis V = {v1, v2,…, vn}.

Source: http://www.boris-belousov.net/2016/05/31/change-of-basis/

To get from x_U (x in the basis U) to x_V, you’ll perform the following steps:

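- obtain the vector projections {x'_1, x'_2,…, x'_n} of x onto each of the new basis vectors {v_1, v_2,…, v_n}

- create the sum of all projections:

x_V = x'_1 + x'_2 + … + x'_n

Each projection x'_i is a scalar multiple of v_i. The scalars \frac{x \cdot v_i}{|v_i|^2} are the coordinates of x in the new basis.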
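As a rough sketch of these two steps, the snippet below projects a 2D vector onto an orthogonal basis and then sums the projections to recover the original vector. The basis vectors `v1 = [1, 1]` and `v2 = [1, -1]`, the vector `x = [3, 1]`, and the function names are assumptions made up for this example.

```python
def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

def coordinates_in_orthogonal_basis(x, basis):
    """Coefficient of x along each basis vector v: (x . v) / |v|^2.

    Only valid when the basis vectors are mutually orthogonal.
    """
    return [dot(x, v) / dot(v, v) for v in basis]

def sum_of_projections(coords, basis):
    """Add up coords[i] * basis[i], i.e. the individual vector projections."""
    n = len(basis[0])
    return [sum(c * v[i] for c, v in zip(coords, basis)) for i in range(n)]

v1 = [1.0, 1.0]
v2 = [1.0, -1.0]           # dot(v1, v2) == 0, so the basis is orthogonal
x = [3.0, 1.0]             # x given in the standard basis

coords = coordinates_in_orthogonal_basis(x, [v1, v2])
print(coords)                                # [2.0, 1.0]
print(sum_of_projections(coords, [v1, v2]))  # [3.0, 1.0], the original x
```

Here x equals 2 times v1 plus 1 times v2, so its coordinates in the new basis are (2, 1).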

Note: I’d like to reemphasize that this approach only works if the new basis vectors are orthogonal to each other. If they are not, we can still perform the change of basis, but it is more complicated and involves matrix transformations.
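To illustrate why orthogonality matters, here is a small sketch with a non-orthogonal 2D basis. Summing the projections no longer reproduces x, whereas solving the linear system c1 v1 + c2 v2 = x does. The 2x2 solve below uses Cramer's rule as one simple option, not necessarily the approach the note has in mind; the basis, the vector, and the function names are assumptions for this example.

```python
def dot(a, b):
    """Dot product of two equal-length vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))

def projection_coefficients(x, basis):
    """Projection-based coefficients; only correct for orthogonal bases."""
    return [dot(x, v) / dot(v, v) for v in basis]

def solve_2d(x, v1, v2):
    """Solve c1 * v1 + c2 * v2 = x for (c1, c2) with Cramer's rule."""
    det = v1[0] * v2[1] - v2[0] * v1[1]
    if det == 0:
        raise ValueError("v1 and v2 are linearly dependent, so not a basis")
    c1 = (x[0] * v2[1] - v2[0] * x[1]) / det
    c2 = (v1[0] * x[1] - x[0] * v1[1]) / det
    return [c1, c2]

def combine(coords, basis):
    """Linear combination coords[0] * basis[0] + coords[1] * basis[1] + ..."""
    n = len(basis[0])
    return [sum(c * v[i] for c, v in zip(coords, basis)) for i in range(n)]

v1 = [1.0, 0.0]
v2 = [1.0, 1.0]            # dot(v1, v2) == 1, so the basis is not orthogonal
x = [3.0, 1.0]

wrong = projection_coefficients(x, [v1, v2])
print(combine(wrong, [v1, v2]))   # [5.0, 2.0], which is not x

right = solve_2d(x, v1, v2)
print(right)                      # [2.0, 1.0]
print(combine(right, [v1, v2]))   # [3.0, 1.0], the original x
```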

Why do I need a new vector space?

A vector space is like a framework within which we can perform operations on vectors. Often, the given vector space is not enough to treat a problem. For example, the standard 2D coordinate system that you’ve used in high school is a vector space. It does not provide an appropriate framework to treat 3-dimensional problems.

Vector Spaces in Machine Learning

In data science and machine learning, we usually treat each feature as its own dimension in a vector space. These features might change. Some might be removed, others might be added. Thus, you need to be able to add or remove dimensions in your vector space.

In language modeling, it is common to treat each word as a feature. To be able to model any sentence in the English language, you’d need a vector space with one dimension for every English word. You’d end up with tens of thousands of dimensions, which is computationally expensive. Now suppose you only want to capture sentences related to a specific domain. The vocabulary pertaining to that domain would presumably be enough, allowing you to reduce the dimensionality of your vector space.

Sometimes you may have to transfer a unit of data represented by a vector in an existing feature vector space into a new feature vector space because it makes mathematical operations and applying machine learning models easier.

This is where the change of basis of a vector and vector projections come in handy.

This post is part of a series on linear algebra for machine learning. To read other posts in this series, go to the index.