Dot Product and Orthogonal Vectors

In this post, we learn how to calculate the dot product between two vectors. Furthermore, we look at orthogonal vectors and see how they relate to the dot product.

What is the Inner Product?

The inner product of two vectors is the sum of their element-wise products, and its result is a scalar. Inner products can be defined on finite- as well as infinite-dimensional vector spaces. To calculate it for two vectors with the same number of elements, you multiply each element in the first vector by the corresponding element in the second vector and take the sum of all products.

What is the Dot Product?

The dot product is a special case of the inner product that is limited to the real number space. Like the inner product, it is the sum of the element-wise products of two vectors.

In the examples that follow the distinction between the inner product and the dot product is irrelevant. We will henceforth refer to the dot product.

The dot product is arguably one of the most powerful concepts in linear algebra. In machine learning, for example, it is at the core of neural networks. So how does it work?

How to Calculate the Dot Product?

There are two ways to calculate the dot product. We are going to look at each.

Option 1: Element by Element Multiplication

The first option is to sum all element-by-element products. Let’s take two vectors and call them a and b:

a = \begin{bmatrix}4\\3\end{bmatrix} \, and \, b = \begin{bmatrix}3\\4\end{bmatrix}

Now the dot product resolves to:

a \cdot b = a_1 \times b_1 + a_2 \times b_2

In our case it would be

4 \times 3 + 3 \times 4 = 24

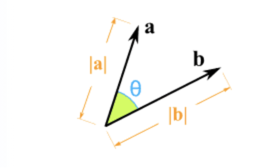
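The element-wise calculation can be sketched in a few lines of Python. The helper name `dot` is an illustrative choice and not something defined in this post; the sketch assumes both vectors are plain lists of the same length.

```python
def dot(a, b):
    """Return the sum of the element-wise products of two vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    # Pair up corresponding elements, multiply each pair, and add the results.
    return sum(x * y for x, y in zip(a, b))


a = [4, 3]
b = [3, 4]
print(dot(a, b))  # 4*3 + 3*4 = 24
```

The same function works for vectors with more than two elements, since `zip` simply walks both lists in parallel.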

Option 2: Vector Angles and Lengths

Another option to calculate the dot product is via the angle between two vectors and their respective lengths.

Source: https://www.mathsisfun.com/algebra/vectors-dot-product.html

From trigonometry, we know the cosine rule, which says that for a triangle with sides of length |a|, |b|, and |c|, we can calculate |c| using |a|, |b|, and the cosine of the angle \theta between the sides a and b:

|c| = \sqrt{|a|^2 + |b|^2 - 2|a||b|\cos(\theta)}

from which we can further derive:

a \cdot b = |a||b|\cos(\theta)

If you are interested in how we arrived at this last formula, you can take a look at the following answer on math.stackexchange https://math.stackexchange.com/questions/116133/how-to-understand-dot-product-is-the-angles-cosine
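As a rough numerical check, the following Python sketch computes the dot product of a = (4, 3) and b = (3, 4) from their lengths and the angle between them, and compares it with the element-wise result. Obtaining \theta as the difference of the two vectors' angles to the x-axis (via `math.atan2`) is an assumption made for this 2D illustration, not a step taken from the text.

```python
import math

a = (4.0, 3.0)
b = (3.0, 4.0)

# Lengths of the two vectors (Euclidean norm).
len_a = math.hypot(a[0], a[1])
len_b = math.hypot(b[0], b[1])

# Angle of each vector measured from the x-axis; theta is the gap between them.
theta = math.atan2(b[1], b[0]) - math.atan2(a[1], a[0])

dot_geometric = len_a * len_b * math.cos(theta)
dot_elementwise = a[0] * b[0] + a[1] * b[1]

# Both values should agree up to floating-point rounding.
print(dot_geometric, dot_elementwise)
```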

The dot product is commutative, which means the order of the two vectors in the multiplication is irrelevant:

a \cdot b = b\cdot a

It is also distributive over addition. If we had three vectors a, b, c then:

a \cdot (b + c) = a \cdot b + a \cdot c
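Both properties can be spot-checked on concrete numbers. In the Python sketch below, the helpers `dot` and `add` and the third vector c = (1, -2) are arbitrary choices made for illustration; a check on one example is of course not a proof.

```python
def dot(u, v):
    """Sum of the element-wise products of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def add(u, v):
    """Element-wise sum of two equal-length vectors."""
    return [x + y for x, y in zip(u, v)]


a, b, c = [4, 3], [3, 4], [1, -2]  # c is an arbitrary extra vector

# Commutativity: a . b equals b . a
print(dot(a, b) == dot(b, a))

# Distributivity over addition: a . (b + c) equals a . b + a . c
print(dot(a, add(b, c)) == dot(a, b) + dot(a, c))
```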

How to Calculate the Angle Between Two Vectors?

In the previous section, we learned that we can calculate the dot product between two vectors either using the lengths of the vectors and the cosine of the angle between them, or by adding up the element-wise products of the vector entries.

The two methods yield the same result, so for 2D vectors we can say:

a \cdot b = a_1 \times b_1 + a_2 \times b_2 = |a||b|\cos(\theta)

\frac{a \cdot b}{|a||b|} = \cos(\theta)

Going back to our previous vectors a = \begin{bmatrix}4\\3\end{bmatrix} and b = \begin{bmatrix}3\\4\end{bmatrix}, we can calculate their dot product

a \cdot b = a_1 \times b_1 + a_2 \times b_2 = 4 \times 3 + 3 \times 4 = 24

and the respective lengths of the vectors:

|a| = \sqrt{4^2 + 3^2} = 5 \, and \, |b| = \sqrt{3^2 + 4^2} = 5

therefore

|a||b| = 5 \times 5 = 25

Accordingly

\cos(\theta) = \frac{24}{25} = 0.96

\theta = \arccos(0.96) \approx 16.26^\circ
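The calculation above can be wrapped in a small Python function. This is a minimal sketch: the name `angle_between` is hypothetical, and clamping the cosine to the range [-1, 1] is an added safeguard against floating-point rounding rather than part of the formula.

```python
import math


def angle_between(a, b):
    """Return the angle between two vectors in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    len_a = math.sqrt(sum(x * x for x in a))
    len_b = math.sqrt(sum(y * y for y in b))
    if len_a == 0 or len_b == 0:
        raise ValueError("the angle is undefined for a zero-length vector")
    cos_theta = dot / (len_a * len_b)
    # Rounding can push the ratio slightly outside [-1, 1], which acos rejects.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


print(round(angle_between([4, 3], [3, 4]), 2))  # 16.26
```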

Orthogonal Vectors

As you might remember from high school, when \theta is a right angle (90 degrees), then

\cos(90^\circ) = 0

which in turn implies

a \cdot b = |a||b| \times 0 = 0

We can also arrive at this conclusion by using the element-wise multiplication of two perpendicular vectors. Let’s say we have two vectors

a = \begin{bmatrix}0\\1\end{bmatrix}, b = \begin{bmatrix}1\\0\end{bmatrix}

a \cdot b = 0 \times 1 + 1 \times 0 = 0

In other words, the dot product of two perpendicular vectors is 0. We also say that a and b are orthogonal to each other. This is an extremely important implication of the dot product for reasons that you will learn if you keep reading.
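In code, this gives a simple orthogonality test: compute the dot product and check whether it is zero. The sketch below uses a hypothetical helper `is_orthogonal`; the tolerance `tol` is an assumption added because floating-point dot products of perpendicular vectors are often tiny non-zero numbers rather than exactly 0.

```python
import math


def is_orthogonal(a, b, tol=1e-9):
    """Return True if the dot product of a and b is zero within a tolerance."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.isclose(dot, 0.0, abs_tol=tol)


print(is_orthogonal([0, 1], [1, 0]))  # True: 0*1 + 1*0 = 0
print(is_orthogonal([4, 3], [3, 4]))  # False: the dot product is 24
```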

This post is part of a series on linear algebra for machine learning. To read other posts in this series, go to the index.