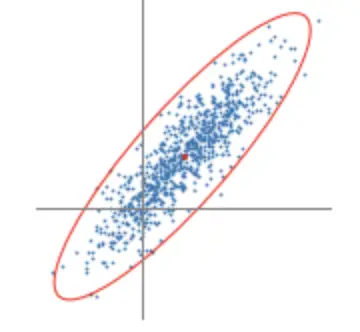

Eigenvalue Decomposition Explained

In this post, we learn how to decompose a matrix into its eigenvalues and eigenvectors. We also discuss the uses of the Eigendecomposition.

The eigenvalue decomposition or eigendecomposition is the process of decomposing a matrix into its eigenvectors and eigenvalues. We can also transform a matrix into an Eigenbasis (the basis matrix where every column is an eigenvector).

Why is the Eigendecomposition Useful?

Matrix operations such as transformations or multiplications are computationally expensive. In applications such as machine learning, you often have thousands or millions of dimensions. Imagine you have to perform matrix transformations repeatedly on matrices in millions of dimensions. Even the best computers quickly reach their limits. But as we discussed before, operations are much simpler on diagonal matrices. So if we can decompose a matrix into a diagonal form before we apply any kind of costly operation, it makes our lives, as well as the lives of our computers, much easier. Enter Eigendecomposition.

How the Eigendecomposition Works

Remember that for an eigenvector, multiplication with the transformation matrix A, is equivalent to multiplying with a simple scalar λ. If we expand this idea from vectors to matrices, most matrices can be decomposed into a matrix of column eigenvectors P and a diagonal matrix D that is filled with eigenvalues on the main diagonal. When we multiply P and D, each column (each eigenvector) would be multiplied with the corresponding scalar (each eigenvalue).

P = \begin{bmatrix}

v_{11} & v_{21} \\

v_{12} & v_{22} \\

\end{bmatrix}

\,

D = \begin{bmatrix}

\lambda_1 & 0 \\

0 & \lambda_2 \\

\end{bmatrix}In the article on the change of basis matrix, we’ve covered how to perform a transformation into a different basis. You multiply a vector with the corresponding basis matrix, perform the transform, and finally transform the vector back into its original basis using the identity of the transformation matrix. Similarly, you can use the eigenvector-matrix P to transform a vector to the eigenbasis. Now you can apply the transformation using the much simpler diagonal matrix D. Afterwards; you transform back to your original basis using the inverse of P.

A = PDP^{-1}What happens if you have to apply the transformation (let’s say a multiplication) several times?

AA = PDP^{-1}PDP^{-1} = PDIDP^{-1} = PD^{2}P^{-1}\\

A^{2} = PD^{2}P^{-1}As you see, you don’t have to transform back and forth between bases for every multiplication. You only need to transform to the eigenbasis once; then you can apply all the transformations you need to D. Lastly, you transform back to your original basis.

Let’s apply this to a matrix A with a vector v.

A =

\begin{bmatrix}

2 & 1 \\

1 & 2\\

\end{bmatrix} \,,

v =

\begin{bmatrix}

1 \\

1\\

\end{bmatrix}Recall that we arrived at the following eigenvalues.

\lambda = 1 \\ \lambda = 3

If you substitute this into our characteristic polynomial, you’ll get x= y for λ=1 and x = -y for λ=3. For the eigenvectors, remember that we can pick any value as long as these equations remain true.

e_1 = \begin{bmatrix}

1 \\

-1\\

\end{bmatrix}\,

e_2 = \begin{bmatrix}

1 \\

1\\

\end{bmatrix}Now we can decompose A into a matrix of eigenvectors and eigenvalues.

PDP^{-1} = \begin{bmatrix}

1 & 1\\

-1 & 1\\

\end{bmatrix}

\begin{bmatrix}

1 & 0\\

0 & 3\\

\end{bmatrix}

\begin{bmatrix}

0.5 & -0.5\\

0.5 & 0.5\\

\end{bmatrix}If we want to apply the transformation encapsulated by A 2 times, we could do it the classic way.

AA = A^{2} =

\begin{bmatrix}

2 & 1 \\

1 & 2\\

\end{bmatrix}

\begin{bmatrix}

2 & 1 \\

1 & 2\\

\end{bmatrix}

=

\begin{bmatrix}

5 & 4 \\

4 & 5\\

\end{bmatrix}The other and computationally more efficient way is by using the eigendecomposition.

PD^2P^{-1} = \begin{bmatrix}

1 & 1\\

-1 & 1\\

\end{bmatrix}

\begin{bmatrix}

1 & 0\\

0 & 9\\

\end{bmatrix}

\begin{bmatrix}

0.5 & -0.5\\

0.5 & 0.5\\

\end{bmatrix}

=

\begin{bmatrix}

5 & 4 \\

4 & 5\\

\end{bmatrix}As you can see, the result is the same.

This post is part of a series on linear algebra for machine learning. To read other posts in this series, go to the index.