What is the Von Neumann Architecture

The von Neumann architecture is a foundational computer hardware architecture that most modern computer systems are built upon. It consists of the control unit, the arithmetic and logic unit, the memory unit, registers, and input and output devices.

The key features of the von Neumann architecture are:

- Data as well as the program operating on that data are stored in a single read-write memory unit.

- All contents stored in memory are addressable by storage location.

- Execution of program instructions occurs in a sequential manner.
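The three features above can be illustrated with a minimal sketch of a toy machine. The instruction names (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout are assumptions made up for this example, not a real instruction set.

```python
# Hypothetical toy machine: one list serves as the single read-write memory.
# Cells 0-3 hold instructions, cells 4-6 hold data; both are reached by address.
memory = [
    ("LOAD", 4),     # address 0: copy memory[4] into the accumulator
    ("ADD", 5),      # address 1: add memory[5] to the accumulator
    ("STORE", 6),    # address 2: write the accumulator to memory[6]
    ("HALT", None),  # address 3: stop
    2,               # address 4: data
    3,               # address 5: data
    0,               # address 6: the result is written here
]


def run(memory):
    """Execute instructions one after another, starting at address 0."""
    accumulator = 0
    address = 0
    while True:
        opcode, operand = memory[address]
        address += 1  # sequential execution: continue with the next cell
        if opcode == "LOAD":
            accumulator = memory[operand]
        elif opcode == "ADD":
            accumulator += memory[operand]
        elif opcode == "STORE":
            memory[operand] = accumulator
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")


run(memory)
print(memory[6])  # 5
```

Changing the program only means writing different tuples into the first cells of `memory`; the `run` function, which stands in for the hardware, stays untouched.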

The Significance of the Von Neumann Architecture

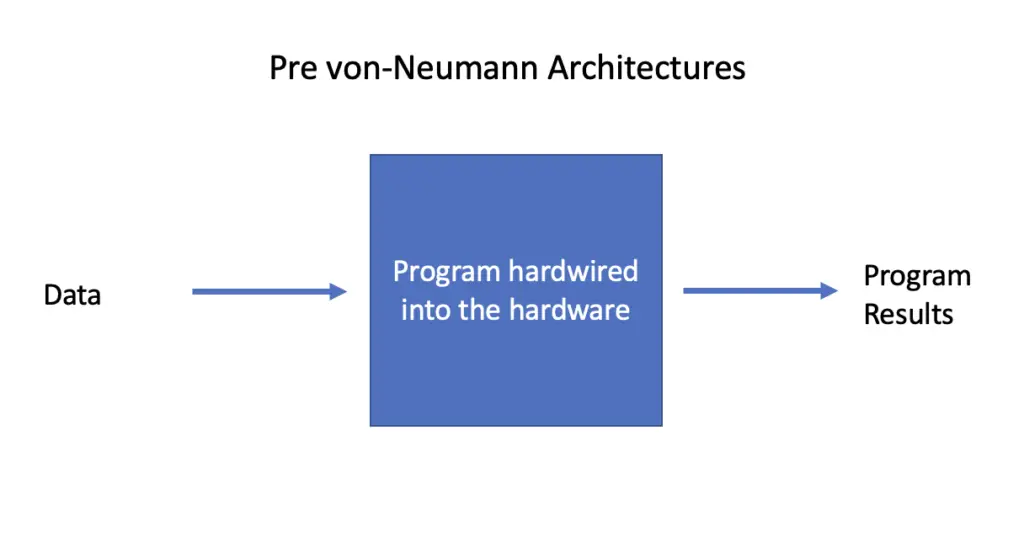

Until the von Neumann architecture emerged, a computer’s ability to execute a program was directly tied to its hardware configuration. To change the program, parts of the computer’s hardware had to be rebuilt and reorganized, which entailed a lot of work and effort.

The Colossus

The Colossus, widely regarded as the first programmable electronic digital computer, was built for the codebreakers at Bletchley Park in the UK during World War II. At that time the British government was looking for a way to crack German radio communications. The signals were easy to intercept, but for a long time the Allies could not read them because the messages were encrypted. Morse code traffic was enciphered with a device known as the Enigma machine, while high-level teleprinter traffic was enciphered with the Lorenz cipher machine.

The Colossus was designed by Tommy Flowers, an engineer at the General Post Office, to help decipher intercepted Lorenz messages. Alan Turing, who also worked at Bletchley Park, attacked the Enigma with a different, electromechanical machine called the Bombe.

The Colossus went through several iterations that were kept secret for a long time. Its architecture was not very convenient for general-purpose computing, since it could not store program instructions. Instead, it was programmed through hardware manipulation in the form of switches and plugs.

Von Neumann and the Rise of Software

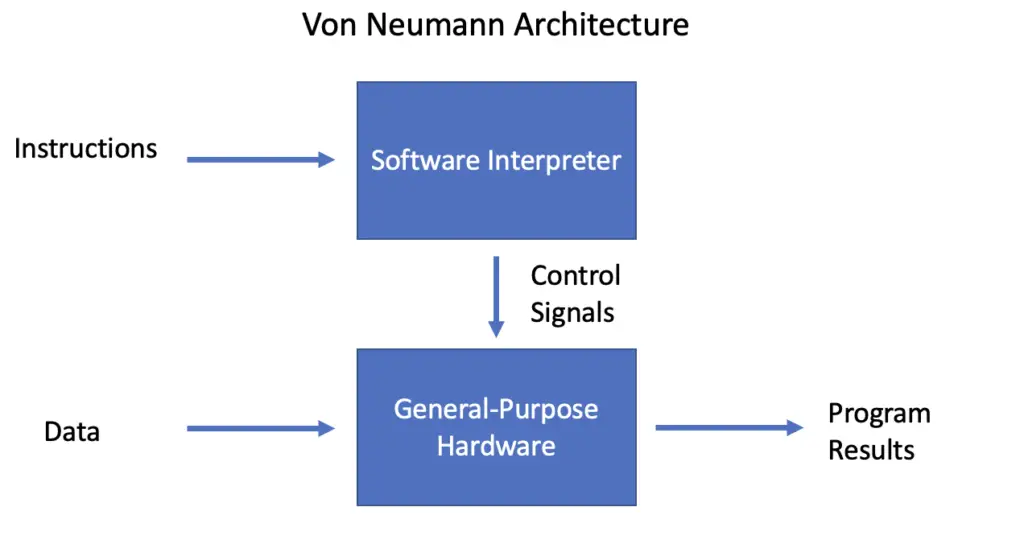

In 1945 John von Neumann, one of the luminaries of 20th-century computer science and math, proposed an architecture that would allow for re-programmability without changes to the computer’s hardware. The basic idea was that not only the data but also the program instructions themselves would be stored in digital memory rather than being expressed through hardware.

The instructions would be communicated to the unit performing the calculations through control signals rather than switches and plugs. This architecture requires an additional module that translates the instructions received through the input device into control signals. These control signals, along with the data, would then be transmitted to a general-purpose arithmetic and logic unit, which would perform the calculations as instructed by the signals.

The instructions are provided to this module in the form of codes that became known as software. Instead of rebuilding the hardware each time, the programmer just had to change the codes sent to the interpreter.

Components of the Von Neumann Architecture

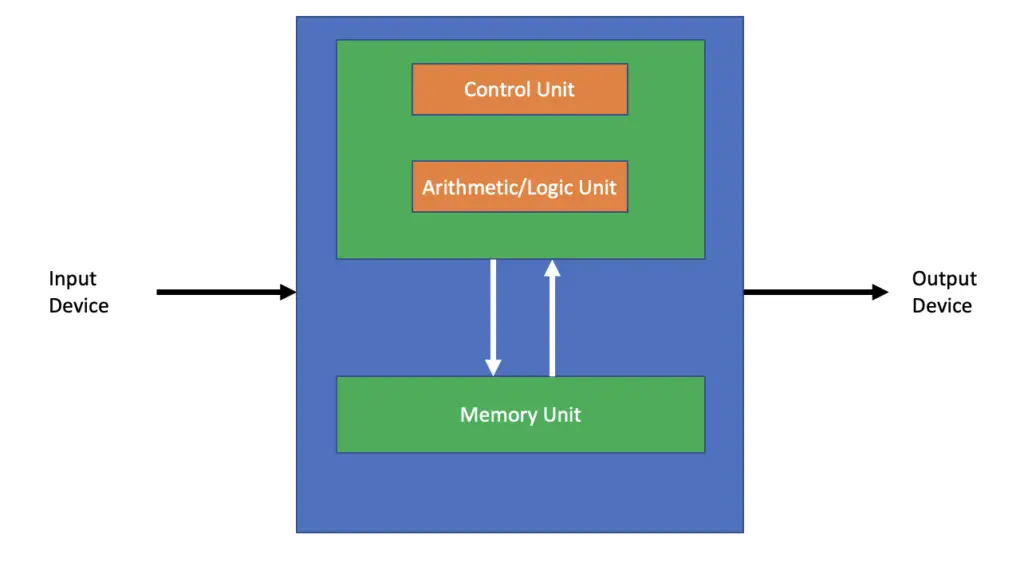

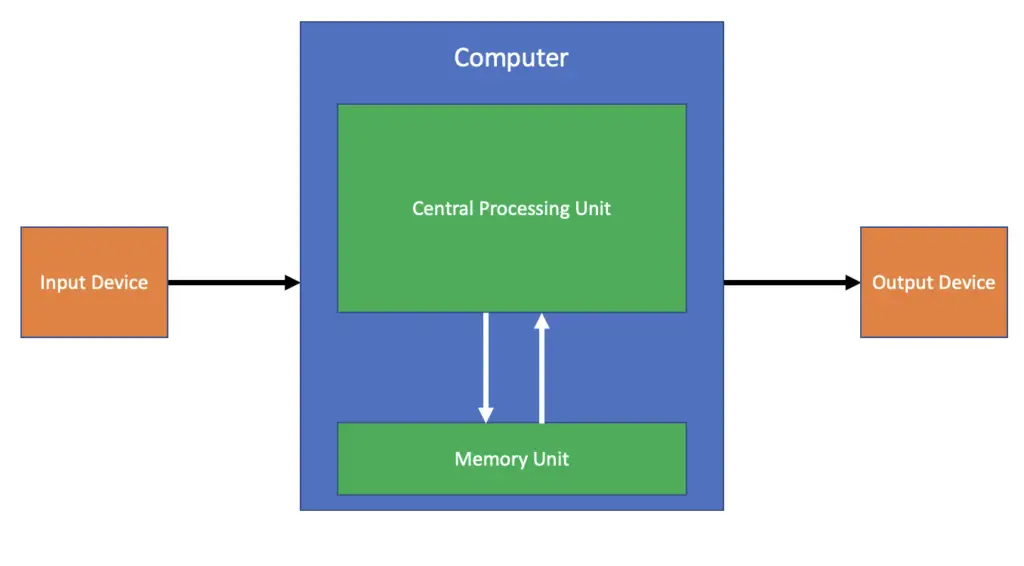

At a high level, a computer system consists of three main components:

- A CPU or Central Processing Unit to perform operations and calculations

- Memory to hold data and instructions

- Input/Output devices to send instructions and data to the computer and receive output generated by the computer.

Central Processing Unit

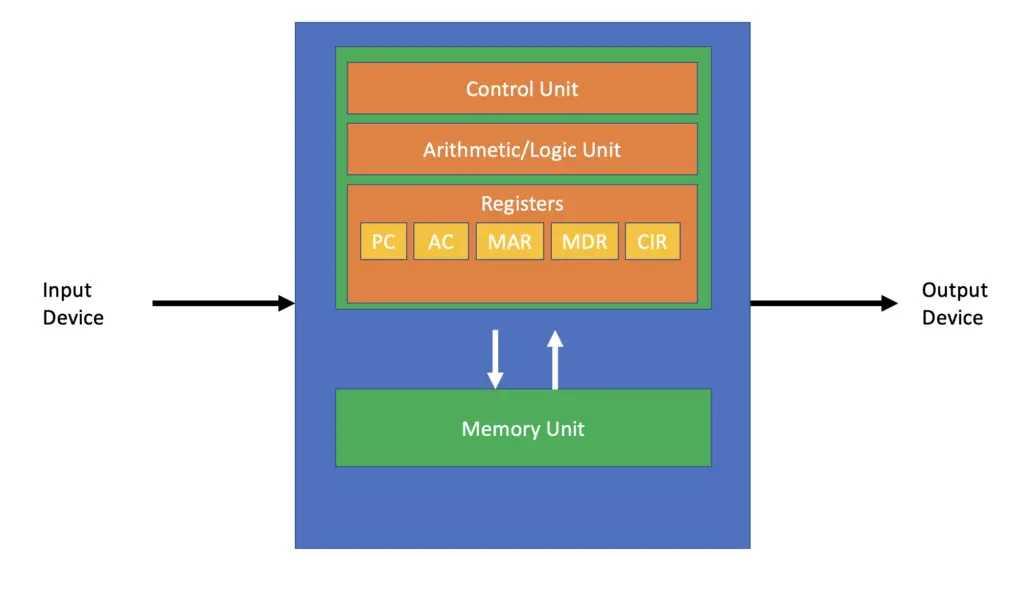

The central processing unit is the core part of the computer where programs are executed and data is processed. It consists of several components.

Arithmetic Logic Unit

The arithmetic logic unit (ALU) is responsible for performing the calculations and applying the rules provided by a program to the data. At a fundamental level, the ALU performs basic arithmetic and Boolean logic operations, such as addition, subtraction, and comparisons like greater than, less than, or equal to.
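As a rough sketch, an ALU can be thought of as a function that takes an operation code and two operands and returns a result. The operation names below are hypothetical and chosen only for illustration.

```python
import operator

# Hypothetical operation table: the names are illustrative, not a real instruction set.
ALU_OPERATIONS = {
    "ADD": operator.add,   # arithmetic
    "SUB": operator.sub,
    "AND": operator.and_,  # bitwise Boolean logic
    "OR": operator.or_,
    "GT": operator.gt,     # comparisons
    "LT": operator.lt,
    "EQ": operator.eq,
}


def alu(operation, a, b):
    """Apply the selected operation to the two operands and return the result."""
    if operation not in ALU_OPERATIONS:
        raise ValueError(f"unsupported operation: {operation}")
    return ALU_OPERATIONS[operation](a, b)


print(alu("ADD", 6, 7))  # 13
print(alu("LT", 6, 7))   # True
```

The ALU itself makes no decisions about which operation to run; in this sketch that choice arrives as the `operation` argument, which plays the role of the control signals.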

Control Unit

The control unit processes the program instructions and tells the arithmetic logic unit what to do on the basis of the instructions. It is also responsible for controlling how data moves around the computer system.

Registers

Registers are a type of short-term memory that is directly integrated into the CPU. They are used to store the instructions and data that the CPU is about to use. The CPU usually has the following registers:

- The Program Counter (PC) tracks the main memory address of the next program instruction that the CPU requires from the main memory and passes it to the memory address register.

- The Memory Address Register (MAR) holds the address of the memory location that is about to be read from or written to. This can be the location of the next instruction to fetch or the location of the data that the current instruction reads or writes. The memory address register only stores addresses, not data or instructions.

- The Memory Data Register (MDR) temporarily stores the contents that were read from, or are about to be written to, the address held by the memory address register. Unlike the memory address register, the memory data register stores actual data and instructions. If the content is an instruction, it is passed on to the current instruction register.

- The Current Instruction Register (CIR) holds the most recently fetched instruction while the CPU decodes and executes it.

- The Accumulator (AC) stores the results produced by the arithmetic logic unit.
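How these registers cooperate can be sketched as a simplified fetch and execute cycle. The instruction names and the memory layout below are assumptions for this example; real CPUs work on binary encodings and have many more registers.

```python
from dataclasses import dataclass


@dataclass
class Registers:
    pc: int = 0         # Program Counter
    mar: int = 0        # Memory Address Register
    mdr: object = None  # Memory Data Register
    cir: object = None  # Current Instruction Register
    ac: int = 0         # Accumulator


def fetch(regs, memory):
    regs.mar = regs.pc           # the PC passes the address to the MAR
    regs.mdr = memory[regs.mar]  # the contents at that address land in the MDR
    regs.cir = regs.mdr          # the instruction moves on to the CIR
    regs.pc += 1                 # the PC now points at the next instruction


def execute(regs, memory):
    """Run the instruction in the CIR. Returns False once HALT is reached."""
    opcode, operand = regs.cir
    if opcode == "HALT":
        return False
    regs.mar = operand               # address of the data to read or write
    if opcode == "STORE":
        regs.mdr = regs.ac           # value to be written
        memory[regs.mar] = regs.mdr
    elif opcode in ("LOAD", "ADD"):
        regs.mdr = memory[regs.mar]  # value that was read
        regs.ac = regs.mdr if opcode == "LOAD" else regs.ac + regs.mdr
    else:
        raise ValueError(f"unknown opcode: {opcode}")
    return True


# Hypothetical program: add the values at addresses 4 and 5, store the sum at 6.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", None), 10, 32, 0]
regs = Registers()
running = True
while running:
    fetch(regs, memory)
    running = execute(regs, memory)

print(regs.ac, memory[6])  # 42 42
```

Note that the MAR is used twice per instruction in this sketch: once to fetch the instruction and once to reach the data the instruction refers to.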

Cache

The cache is an intermediate memory between RAM and the registers. Fetching data from the separate memory unit takes a long time. If computers fetched data directly from memory into the CPU for every small step of a calculation, the CPU would spend a lot of time doing nothing while waiting for the data to arrive from the memory unit. The cache is built directly into the CPU and is much faster than RAM, but it is also much more expensive to manufacture. For that reason it is small and usually only holds the instructions and data that the CPU uses frequently.
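The effect can be illustrated with a small simulation that counts how often a read is served from the cache (a hit) and how often it has to go to main memory (a miss). The least-recently-used eviction policy and the class name `CachedMemory` are assumptions for this sketch; real caches work on blocks of memory and use a variety of policies.

```python
from collections import OrderedDict


class CachedMemory:
    """Toy model of a small cache sitting in front of a slower main memory."""

    def __init__(self, memory, capacity):
        self.memory = memory
        self.capacity = capacity
        self.cache = OrderedDict()  # address -> value, oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.cache:
            self.hits += 1
            self.cache.move_to_end(address)  # mark as most recently used
            return self.cache[address]
        self.misses += 1                     # slow path: go to main memory
        value = self.memory[address]
        self.cache[address] = value
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)   # evict the least recently used entry
        return value


ram = list(range(100, 116))  # 16 memory cells holding the values 100..115
cached = CachedMemory(ram, capacity=4)

# A loop that keeps touching the same three addresses.
for _ in range(10):
    for address in (0, 1, 2):
        cached.read(address)

print(cached.hits, cached.misses)  # 27 3
```

Only the first pass through the loop has to wait for main memory; the remaining 27 reads are served from the cache.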

Memory Unit

As the name implies, the job of the memory unit is to store all the data and instructions that are required by the computer. Main memory holds gigabytes worth of information in modern computers. It is also referred to as random access memory (RAM) because the information stored in it can be accessed in any order. RAM can only be used for temporary storage since all the information is wiped out once the computer is shut off. Programs and data that need to survive for more than one session are therefore stored on secondary storage such as hard drives and are loaded into RAM once the computer needs to use them.

There is a second type of memory that stores information across sessions known as ROM or read-only memory. As the name implies, no new information can be written to ROM. ROM is used to store things like the startup program for your computer that should not be changed but has to be quickly accessible once the machine boots up.

A Note on Secondary Storage

Secondary storage refers to things like hard drives that are used to store information across boot cycles. Strictly speaking, they are external devices and therefore not part of the classical von Neumann architecture. Accordingly, I won’t cover them in this post.

Bus Interconnections

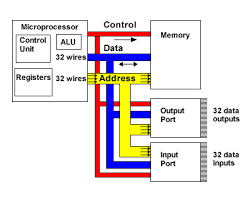

Buses are lanes that allow instructions and data to travel between components.

We distinguish between three types of buses:

- The control bus is used to transfer signals from the CPU to the memory and external I/O devices initiating read and write operations and ensuring that the operations are properly synchronized.

- Via the address bus, the CPU sends memory addresses that tell the memory unit or secondary storage where information is to be written to or read from.

- The data bus carries data between the CPU, the memory, and I/O devices. When performing write operations, the data will travel from the CPU to the memory unit and I/O devices. When performing read operations the data moves from memory and I/O devices to the CPU.
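The division of labor between the three buses can be sketched as a single read or write transaction. The class and function names below are hypothetical, and the model ignores timing and synchronization entirely.

```python
from dataclasses import dataclass


@dataclass
class Buses:
    control: str = "IDLE"  # control bus: which operation is requested
    address: int = 0       # address bus: where to read or write
    data: int = 0          # data bus: the value being transferred


class MemoryUnit:
    def __init__(self, size):
        self.cells = [0] * size

    def respond(self, buses):
        """React to whatever the CPU has placed on the buses."""
        if buses.control == "WRITE":
            self.cells[buses.address] = buses.data  # data flows CPU -> memory
        elif buses.control == "READ":
            buses.data = self.cells[buses.address]  # data flows memory -> CPU
        buses.control = "IDLE"


def cpu_write(buses, memory, address, value):
    buses.address = address
    buses.data = value
    buses.control = "WRITE"
    memory.respond(buses)


def cpu_read(buses, memory, address):
    buses.address = address
    buses.control = "READ"
    memory.respond(buses)
    return buses.data


buses = Buses()
memory = MemoryUnit(size=8)
cpu_write(buses, memory, address=3, value=7)
print(cpu_read(buses, memory, address=3))  # 7
```

In both directions the address always travels from the CPU to the memory unit; only the direction of the data changes between a read and a write.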

Instruction Set Architecture and Microarchitecture

The von Neumann architecture describes a way of building computers that makes it possible to program computers through software without the need to adjust the hardware for every new program.

But how do programmers know that the hardware can handle the instructions in their software?

The instruction set architecture is an abstract machine model that defines the set of instructions that the processor can handle. These definitions include things like data types, types of operations and how they are executed, and execution semantics such as how to deal with interrupts. Thus, if two computers have the same instruction set architecture, the programmer knows that the software that runs on one computer will also run on the other one.

The microarchitecture describes how the instruction set architecture is implemented at a hardware level. It is concerned with the physical design of the processor such as the bus widths, size of the cache as well as conceptual questions like the ordering of executions. The job of the microarchitecture is to optimize performance across metrics such as speed, cost, and energy consumption.

The instruction set architecture tells the programmer whether the computer is compatible with the types of instructions that their software wants to execute. The microarchitecture tells the programmer whether the required performance can be achieved.
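To make the distinction concrete, here is a toy sketch. Assume a hypothetical instruction set that defines a single 8-bit `ADD` whose result wraps around at 256. Two different "microarchitectures" can implement that contract in very different ways and still be interchangeable from the programmer's point of view.

```python
def add_direct(a, b):
    """Implementation 1: add in one step and keep only the low 8 bits."""
    return (a + b) & 0xFF


def add_ripple_carry(a, b):
    """Implementation 2: add bit by bit, passing a carry along (ripple-carry)."""
    result = 0
    carry = 0
    for bit in range(8):
        x = (a >> bit) & 1
        y = (b >> bit) & 1
        total = x ^ y ^ carry                # sum bit for this position
        carry = (x & y) | (carry & (x ^ y))  # carry into the next position
        result |= total << bit
    return result                            # the final carry is dropped


# Software written against the hypothetical ADD contract sees the same
# results on either implementation.
print(add_direct(200, 100), add_ripple_carry(200, 100))  # 44 44
```

The two functions differ in how much work they do per addition, which is the kind of trade-off the microarchitecture is concerned with, but not in the results they produce, which is what the instruction set architecture guarantees.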

The Von Neumann Bottleneck

In von Neumann architectures the power of the CPU and the size of memory cannot be fully exploited because the data cannot be transported fast enough between memory and the CPU.

This problem arises because transfer between memory and the CPU is inherently sequential. We can try to increase the clock speed or the bus width to transfer data faster and in greater volumes. But that way we will very quickly run into hard physical limitations.

Modern CPUs can usually process data at a much faster rate than it can be transferred to or from the CPU. As a consequence, the CPU will spend a lot of time idle.
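A back-of-the-envelope calculation shows the size of the effect. The rates used below are made-up numbers for illustration, and the model assumes that the CPU can only work on data as fast as the bus delivers it, with no cache to help.

```python
def cpu_idle_fraction(cpu_rate, transfer_rate):
    """Fraction of time the CPU waits for data.

    cpu_rate:      how much data the CPU could process per second
    transfer_rate: how much data the bus can deliver per second (same unit)
    """
    if cpu_rate <= 0 or transfer_rate < 0:
        raise ValueError("rates must be positive")
    utilization = min(1.0, transfer_rate / cpu_rate)
    return 1.0 - utilization


# Hypothetical example: the CPU could process 100 GB/s,
# but memory only delivers 25 GB/s.
print(cpu_idle_fraction(cpu_rate=100, transfer_rate=25))  # 0.75
```

Under these assumptions the CPU sits idle three quarters of the time, no matter how much faster it could compute.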